前言

先前在Windows Docker Desktop 啟用了 Kubernetes , 但因為Windows Kubernetes 只能有單節點,且不是這麼穩定又耗效能,因此,在家搭建一套主流大家在用Linux 版的Kubernetes。

環境說明

- Ubuntu 22.04

- 預先安裝完 Docker

安裝必要軟體

執行以下指令進行系統更新與安裝:

sudo apt-get update sudo apt-get upgrade sudo apt-get install -y net-tools git

- 使用Chrony設定時間同步 Chrony 提供了更快的同步和更高的精度,且資源使用更少。

安裝 Chrony

sudo apt update sudo apt install chrony

修改 Chrony設定

sudo vim /etc/chrony/chrony.conf

pool 0 asia.pool.ntp.org iburst pool 1.pool.ntp.org iburst pool 2.pool.ntp.org iburst pool 3.pool.ntp.org iburst pool 4.pool.ntp.org iburst

重新啟動及測試

sudo systemctl start chrony sudo systemctl enable chrony sudo chronyc -a makestep // 立刻同步時間 chronyc tracking // 檢查 Chrony 同步狀態 chronyc sources -v // 還可以查看連線的 NTP 伺服器列表及其同步狀態 timedatectl status // 確認系統時間狀態

- 關閉防火牆:

# 允許 Kubernetes API server 通信 sudo ufw allow 6443/tcp # Kubernetes API server # etcd 集群通訊 sudo ufw allow 2379/tcp # etcd client communication sudo ufw allow 2380/tcp # etcd server-to-server communication # kubelet API sudo ufw allow 10250/tcp # Kubelet API sudo ufw allow 10255/tcp # Read-only Kubelet API (可選) sudo ufw allow 10248 # Controller manager 和 scheduler sudo ufw allow 10251/tcp # kube-scheduler sudo ufw allow 10252/tcp # kube-controller-manager # Kubernetes DNS (CoreDNS) sudo ufw allow 53/tcp # DNS TCP sudo ufw allow 53/udp # DNS UDP # NodePort services (範圍可自定義,預設 30000-32767) sudo ufw allow 30000 # NodePort services # 啟用防火牆 sudo ufw enable sudo ufw status verbose

- 關閉 OS 的 Swap,避免記憶體不足時關閉容器:

sudo swapoff -a sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

安裝 Kubernetes 主程式

新增核心參數

sudo tee /etc/modules-load.d/containerd.conf <<EOF overlay br_netfilter EOF

sudo modprobe overlay sudo modprobe br_netfilter

sudo tee /etc/sysctl.d/kubernetes.conf <<EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF

載入配置變更:

sudo sysctl --system

安裝 Containerd 運行環境

Containerd 是一個輕量級的容器運行環境,管理容器的啟動、停止等基本功能。

sudo apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmour -o /etc/apt/trusted.gpg.d/docker.gpg sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" sudo apt update sudo apt install -y containerd.io containerd config default | sudo tee /etc/containerd/config.toml >/dev/null 2>&1 sudo sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/g' /etc/containerd/config.toml sudo systemctl restart containerd sudo systemctl enable containerd

安裝 Kubernetes 元件

sudo apt-get update # apt-transport-https may be a dummy package; if so, you can skip that package sudo apt-get install -y apt-transport-https ca-certificates curl gpg curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg # This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list sudo apt-get update sudo apt-get install -y kubelet kubeadm kubectl sudo apt-mark hold kubelet kubeadm kubectl

初始化 Kubernetes Master 節點

重新載入

sudo systemctl daemon-reload && sudo systemctl restart kubelet sudo sysctl --system

重啟 Docker:

sudo systemctl daemon-reload && sudo systemctl restart docker sudo systemctl enable kubelet && sudo systemctl restart kubelet

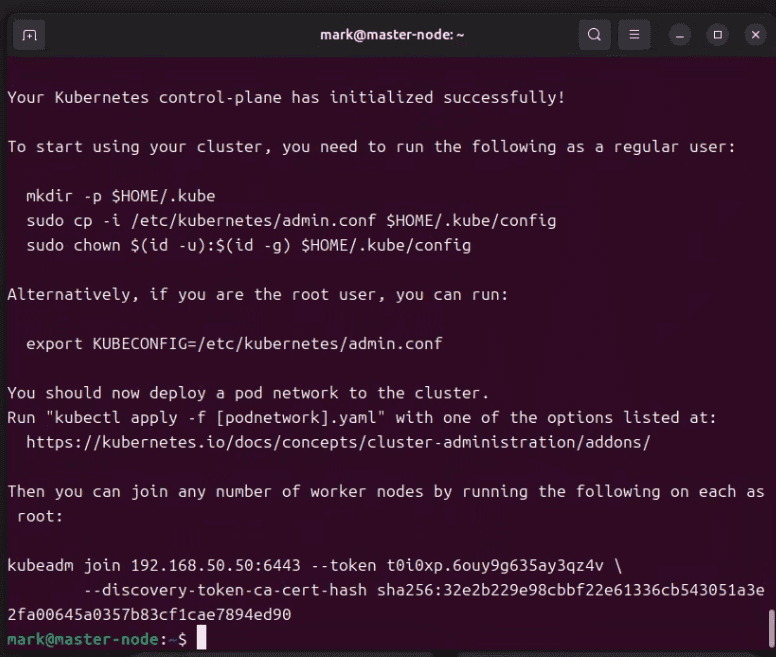

初始化 kubeadm

sudo kubeadm init

初始化成功後,依提提示,複製kubectl設定到使用者:

mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config

安裝 Pod 網路

kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml

依據提示,在其他台,執行以下指令可以加入其他 子(Worker) 節點

kubeadm join 192.168.50.50:6443 --token <TOKEN> --discovery-token-ca-cert-hash sha256:<HASH>

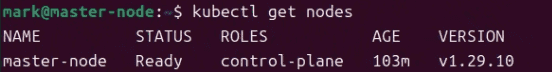

檢查所有節點狀態

kubectl get nodes

檢查節點狀態詳細

kubectl describe node master-node

建立第一個 Kubernetes 容器

準備 Persistent Volume 路徑:

sudo mkdir /var/docker-pvc sudo chmod 777 /var/docker-pvc

部署配置檔 deployment.yaml ( Windows 不用指定Persistent Volumes 位置,但linux 一定要 ex: hostPath: path: /var/docker-pvc)

建立 Kubernetes 部署檔

sudo vim deployment.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: uptime-kuma-pv

spec:

capacity:

storage: 1Gi

accessModes:

- ReadWriteOnce

hostPath:

path: /var/docker-pvc

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: uptime-kuma-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

volumeName: uptime-kuma-pv

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: uptime-kuma-deployment

spec:

replicas: 1

selector:

matchLabels:

app: uptime-kuma

template:

metadata:

labels:

app: uptime-kuma

spec:

containers:

- name: uptime-kuma

image: louislam/uptime-kuma:1

ports:

- containerPort: 3001

volumeMounts:

- name: uptime-kuma-volume

mountPath: /app/data

volumes:

- name: uptime-kuma-volume

persistentVolumeClaim:

claimName: uptime-kuma-pvc

---

apiVersion: v1

kind: Service

metadata:

name: uptime-kuma-service

spec:

type: NodePort

selector:

app: uptime-kuma

ports:

- port: 3001

targetPort: 3001

nodePort: 30000

佈署應用

kubectl apply -f ./deployment.yaml

移除控制平面污點 (單節點環境)

kubectl taint nodes --all node-role.kubernetes.io/control-plane-

這條指令主要用於解決 Kubernetes 中控制平面(Control Plane)節點的資源調度問題。預設情況下,Kubernetes 會為控制平面節點(通常是 master 節點)設置「污點」(Taint),以防止一般的工作負載(Pod)被排程到這些節點上。這是為了確保控制平面節點能夠專注於管理集群並保持穩定。

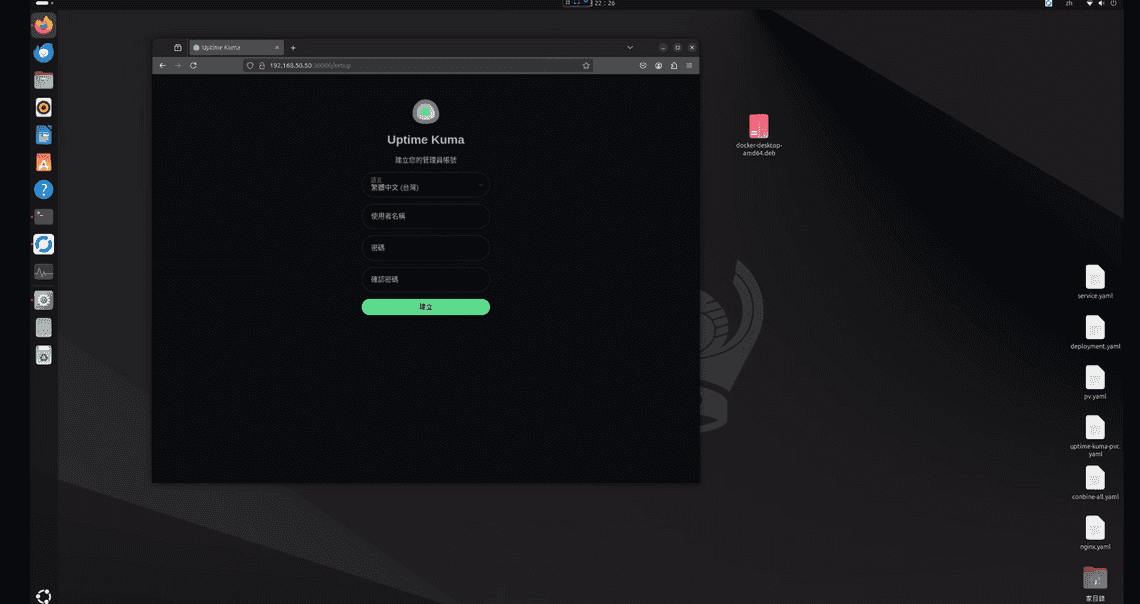

Final Result

此時訪問localhost:30000,就能看到這畫面

補充 - 如果服務安裝失敗或重新安裝 Kubernetes

sudo kubeadm reset -f sudo rm -rf ~/.kube sudo rm -rf /etc/kubernetes /var/lib/etcd /var/lib/kubelet /var/lib/kubeadm /etc/cni /opt/cni sudo systemctl status docker sudo systemctl status containerd sudo apt-get remove --purge -y kubeadm kubectl kubelet kubernetes-cni cri-tools sudo apt-get autoremove -y sudo dpkg --purge kubectl kubeadm kubelet sudo apt-get autoremove --purge -y sudo rm -rf /etc/kubernetes /var/lib/etcd /var/lib/kubelet /etc/cni /opt/cni ~/.kube sudo rm /etc/apt/sources.list.d/kubernetes.list sudo apt-get update